How to iterate over the array in JavaScript and the root of all evil

I will start with an anecdote.

At my work, I am responsible for building a service. It's not important what exactly it does. What is important is that to fulfill the business requirements, it should crunch the ever-growing array of data. And by crunch, I mean iterate over this array multiple times on every run.

Here is a very simplified version of that business requirement: convert Entity to SuperEntity, where SuperEntity is an Entity that has value > 2, has more than 1% of all entities in the group, and has the groupLength property.

Here are the types of both Entity and SuperEntity:

Again this is a very simplified version. In the real world, we also need MegaEntity and TurboDieselEntity.

So, our input is an array of Entities. Here is how we generate it:

And because we will need to access group length, let’s build a lookup map beforehand.

Now we are ready to write our Entity to SuperEntity transformer. The naive approach would look like this:

It looks nice and tidy. But remember the part of the task where input will constantly grow? That means, at some point, input will become so big that we will notice performance penalties from creating intermediate arrays on each step and iterating over them. Let's rewrite it with for loop.

Performance wise it should be much better. No intermediate arrays, no array resizes, and no extra iterations. What I do not like is the decreased readability. Our nice flat eleven-line function became longer and curvier. Let's fix the readability issue with generators.

Generators are a special function that, instead of computing everything in one go and returning a result, returns an iterator that will compute the next chunk when you request it. This means that we can write the map and filter using generators.

The map is straightforward.

The filter is a bit complex because of the type definition of the predicate. We want to use type guards and functions that return a boolean as a predicate.

;

;

;

Okay. Now we got everything we need. Let's write our transformer once more with helpers from above.

We are flat again. We do some extra work in the end because we need to convert our iterable to the array. Performance-wise this version should be slower than for loop version because generators should have some cost, but faster than the first attempt because we iterate only once and do not create any intermediate arrays.

At this point, I was so proud of myself. I thought, why nobody speaks about generators? And I should publish those small helper functions as a library. But then my teammate asked if I was sure the generator approach was that fast. I was sure, but spoiler alert I was wrong. Don’t be like me. Always write benchmarks when performing performance optimizations.

Benchmarking is hard to do, so if your aim is not a new benchmarking framework, use an existing one. I chose benny. Why? It was first in Google results. So no actual research there, but it does everything I need and even can create an HTML page with nice visualization. Here is the benchmark code:

And here is the result:

# npx ts-node src/benchmark.ts

|

for

|

| I expected a more significant difference between array methods and for-loop. But let's get back to our elephant in the room. Why are generators so slow?

I went to the V8 source code to find the answer to this question. But it is surprisingly hard to read and navigate. So I went on a googling quest. Nobody seems to be concerned about whole generators being slow situation. All the info I found was from 2013-2015, and the conclusion is that generators are slow. That was no news to me. I am interested In finding out why. Last resort, the spec. I fell asleep multiple times while reading it, but it looks like I know the answer.

TLDR: It is slow because the generator function will manipulate the call stack on every iteration.

The spec says that the generator should create an alternative call stack, and on every iteration, it will insert this call stack into the program call stack and execute it until the yield statement. Yield will update the call stack of this generator and remove it from the program call stack. This is what makes generators slow.

If that explanation does not help, imagine my handwaving. You are welcome. And if you want to check everything by yourself, here is the link to the ECMA-262 27.5.3 in full glory.

But! But we can create iterators by ourselves, right? Generators are excellent when you want to make any arbitrary function return an iterator, and we need only two functions.

Here is the map function:

Here is the filter:

;

;

;

Yeah, I remember that we are solving the readability problem of the for-loop version. And with helpers like that, it makes the readability problem even worse. But since we are already here, let's benchmark it anyway.

Guess how our transformer function looks?

Here is the updated benchmark:

And here is the result:

# npx ts-node src/benchmark.ts

|

for

|

|

| Here we are. I will use this post as proof that any performance optimization made upfront is useless. I mean, there is no sense in using generators for performance optimization. And I think we can agree that there is no sense in using iterators to solve the readability and performance problems. So we left with array methods and for-loop, where the difference is around 5%.

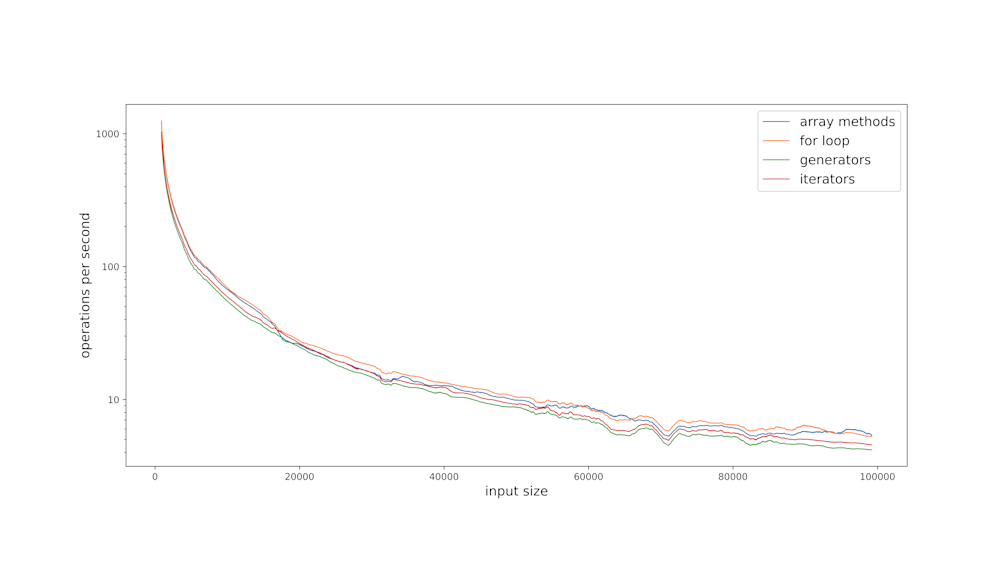

When you are in the measuring contest, it’s hard to stop. And I have a few questions to ask. For example, how different are the results for different input sizes?

There is nothing visible on a linear scale, so this is a logarithmical one. And even though I was expecting for-loop to be a winner with a big margin, in reality, things are different.

Do you see? Something strange happens around 20000 and 65000 input sizes. I rerun the whole thing multiple times on different machines, and it is still there. If you know what it is and why it is there, drop me a message, I would also like to know.

I also wanted to add a nodejs vs deno vs bun comparison. But deno does not have JSON output from the benchmarking tool yet, and I am too lazy to parse console output.

But let's get back to generators. Why may you still want to use them? You may want to use generators when you get data in chunks, and those chunks are arriving slower than you can process them. Imagine any IO operation, reading from the filesystem, network, etc. Instead of waiting till all the chunks are received, you can start processing the data while waiting for the next chunk. You can do that with streams or generators. I find stream API hard to make type-safe and compose. This leaves only one real option - generators. If you want an example, check out readline API, and imagine how easily you can apply map and filter helpers from the above.

Let's stop here. I hope that you had fun reading this. I had fun writing it. And I feel it lacks some bullet points in the end, so here we go.

Mandatory bullet points at the end:

- Newer do a performance optimization without benchmarks.

- Generators in javascript are slow.

- Array methods in Javascript are surprisingly fast.

specs and links

I ran all those benchmarks on the cheapest Hetzner server (CX11), which has one vCPU and 2GB of RAM. With Ubuntu 22.04.1 LTS and NodeJS 18.12.1 on top.

You can find all the code, including benchmarks and Jupyter notebook, in the GitHub repo.